The year 2015 is ending very shortly. I wish every reader of my blog an exciting new year ahead, full of happiness and prosperity.

According to my blog statistics, most of my visitors come from the US and Europe. There is negligible traffic from developing countries. This may be attributed to the fact that the use of geospatial data in developing countries is still in its infancy. On average, I tend to get around 600 visits on my blog, which is not a lot, but it's good to see that someone actually bothers to read the posts that I write. When I get an email from a visitor mentioning that some posts helped them in their professional life, I am over the moon that day :).

These are the all-time top 10 posts on my blog, the ones that seem to have attracted the most users. Some of the posts that I wrote in 2010-11 are still very popular among visitors, especially those related to ArcGIS. Posts related to GIS get more hits than those related to Remote Sensing. I tend to write fewer GIS-related posts as I don’t work with GIS day in and day out. I will write more about Remote Sensing in the coming days. The top ten posts break down as MATLAB: 5, ArcGIS: 4, and eCognition: 1. I could not get the post-specific statistics for 2014 from Blogger, but lately my eCognition-related posts have been liked by many visitors. I have made a few friends through my blog, which is awesome.

- KML creation using MATLAB

- Opening multispectral or hyperspectral ENVI files in MATLAB

- Converting raster dataset to XYZ in ARCGIS !!

- Utilizing Numpy to perform complex GIS operation in ARCGIS 10

- Data Driven Map Book in ArcGIS 10

- ArcPy : Python scripting in ArcGIS 10

- MATLAB GUI for 3D point generation from SR 4000 images

- MATLAB tutorial: Dividing image into blocks and applying a function

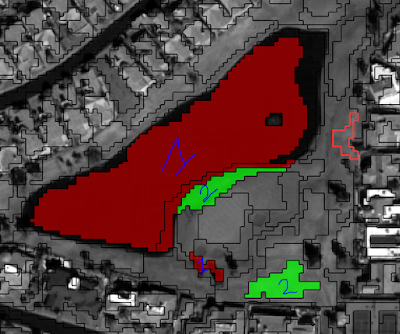

- MATLAB Tutorial: Finding center pivot irrigation fields in a high resolution image

- eCognition Tutorial: Finding trees and buildings from LiDAR with limited information